A Software Engineer's Journey Through Web 2.0, Cloud, Microservices, Containers, and LLMs

Next year will mark two decades of my working in the software industry. This post reflects, at a high level, on how I have seen the software development landscape change from a technology perspective. During these years I have worked at startups, non-governmental organizations and big tech companies (such as Microsoft) while living in four different countries. I also co-founded an edtech startup in South Asia.

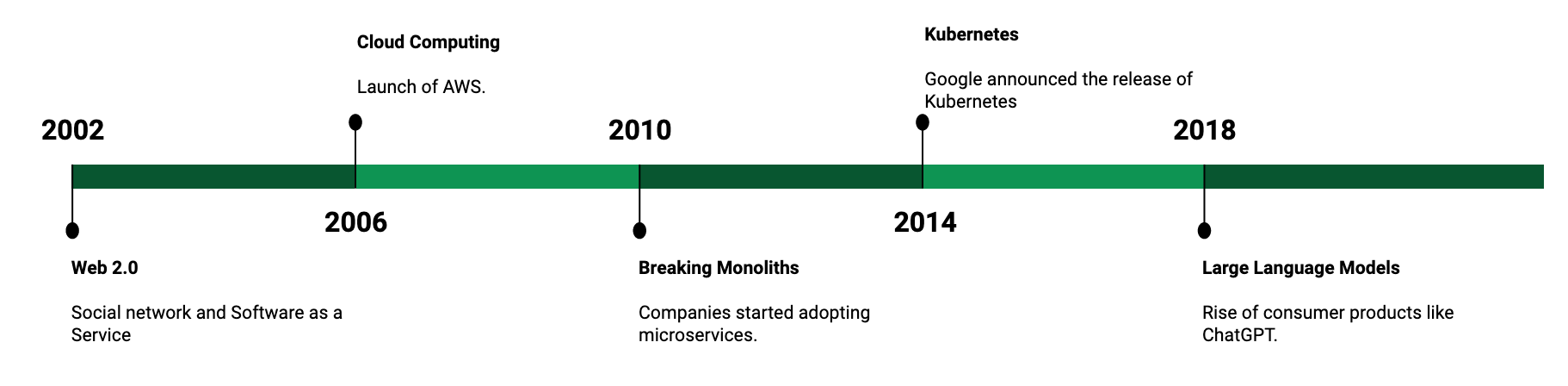

I think there were five major milestones during this period, namely Web 2.0, cloud computing, microservices and DevOps, containers, and large language models.

Web 2.0

As internet infrastructure became faster, more reliable and ubiquitous, the web transitioned from static content to dynamic, user-centered content. Social networks and ecommerce platforms started mushrooming. LinkedIn, Facebook and Flickr were created during this time. Traditional companies started building their presence on the internet, adopting the Software as a Service (SaaS) model.

My first experience working in Web 2.0 was building a CRM website using technologies like HTML, CSS, JavaScript and XML, basically to support CRUD operations for the business domain. This looks quite primitive nowadays, when most boilerplate code is generated by a framework if not provided out of the box, but back then everything had to be implemented from scratch: making an HTTP call to the database, fetching the results as XML, parsing the response to display it on the UI, and so on. That was quite a lot of work on the development side alone. Then there was the separate work of deploying and hosting it on a bare-metal box rented in one of the data centers! I think many younger readers may find this incomprehensible.
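To give a feel for that hand-written plumbing, here is a minimal sketch of the "parse the XML, then build the UI" step. It is written in Python for brevity rather than the JavaScript we used at the time, and the payload shape, the field names and the functions `parse_customers` and `render_rows` are all made up for illustration; this is not the original CRM code.

```python
import xml.etree.ElementTree as ET
from html import escape

# Hypothetical XML payload, standing in for what a backend might have returned.
RESPONSE_XML = """
<customers>
  <customer id="1"><name>Asha</name><email>asha@example.com</email></customer>
  <customer id="2"><name>Bikram</name><email>bikram@example.com</email></customer>
</customers>
"""


def parse_customers(xml_text):
    """Turn the XML response into a list of plain dictionaries."""
    root = ET.fromstring(xml_text.strip())
    return [
        {
            "id": int(node.get("id")),
            "name": node.findtext("name"),
            "email": node.findtext("email"),
        }
        for node in root.findall("customer")
    ]


def render_rows(customers):
    """Build HTML table rows by hand, escaping values before display."""
    return "\n".join(
        f"<tr><td>{c['id']}</td><td>{escape(c['name'])}</td>"
        f"<td>{escape(c['email'])}</td></tr>"
        for c in customers
    )


if __name__ == "__main__":
    print(render_rows(parse_customers(RESPONSE_XML)))
```

Every screen needed some variation of this parsing and rendering code, which is roughly what modern frameworks now hide behind a few lines of configuration.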

Cloud computing

Before Amazon started offering its AWS cloud platform to outside developers, most small and medium-sized companies had to depend on a vendor like IBM to host their applications and serve end users through the vendor's data center. The arrival of the cloud was one of the major upheavals in the software industry, as companies saw the opportunity to scale their apps based on demand and traffic, the same way Amazon was able to reach millions of customers in almost every geography.

One such company was Bubble Motion, funded by Sequoia Capital, where I worked during 2012/13. It fully leveraged AWS cloud offerings to serve customers from locations closer to them, enabling faster delivery and lower latency.

On top of cloud infrastructure, other companies like Heroku started offering even easier options to host applications and databases. This came to be known as Platform as a Service (PaaS). Combined with a framework like Ruby on Rails for bootstrapping applications, startups found it nearly frictionless to build their first Minimum Viable Product (MVP) and reach customers within a few months.

I bootstrapped my first company and released the first iteration of an educational platform that allowed students in Nepal to search for courses and colleges for higher education. We developed it from scratch using Ruby on Rails with Postgres on Heroku, integrated with the Stripe payment APIs, in less than six months with a small team of four engineers.

Microservices and DevOps

Service Oriented Architecture (SOA) was a common approach to designing and developing services before microservices became prominent and were adopted by engineering teams.

While SOA was mostly based on XML protocols, developers found it easier and more lightweight to use HTTP with JSON to build services focused on performing individual tasks. It also enabled smaller teams to take ownership of products and deploy them end to end using DevOps practices.
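As a rough illustration of how little ceremony an HTTP and JSON service needs, here is a minimal sketch using only the Python standard library. The service does a single task, looking up a price. The `/prices/<plan>` route, the `PRICES` data and the `fetch_price` helper are hypothetical names I made up for this example, and a production service would of course sit behind a proper framework and datastore.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical in-memory data; a real service would own its own datastore.
PRICES = {"basic": 10.0, "pro": 25.0}


class PriceHandler(BaseHTTPRequestHandler):
    """A single-purpose service: GET /prices/<plan> returns JSON."""

    def do_GET(self):
        plan = self.path.rsplit("/", 1)[-1]
        if self.path.startswith("/prices/") and plan in PRICES:
            status, payload = 200, {"plan": plan, "price": PRICES[plan]}
        else:
            status, payload = 404, {"error": "not found"}
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet


def fetch_price(plan):
    """Start the service on a free local port, call it once, then stop it."""
    server = HTTPServer(("127.0.0.1", 0), PriceHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        url = f"http://127.0.0.1:{server.server_port}/prices/{plan}"
        # An empty ProxyHandler makes sure the local call bypasses any proxy.
        opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
        with opener.open(url) as response:
            return json.loads(response.read())
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(fetch_price("pro"))
```

The request is a plain URL and the response is a small JSON document, with no envelope or schema tooling in between. I believe that simplicity was a large part of the appeal.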

Companies like Netflix and Amazon were the early pioneers in this space. They started open sourcing many tools that made it easier for others to adopt microservices and DevOps practices, including continuous integration and delivery.

When I attended the Greater Atlanta Software Symposium in 2009, there were already sessions and workshops reflecting on how SOA had failed to live up to its expectations and how REST was quickly becoming the more mainstream choice for software integration. The term microservices had not yet been coined, but the adoption had already started.

Containers and Kubernetes

Companies continued to break down their monoliths into hundreds of microservices. It became challenging to orchestrate such a large number of services and to automate their deployment workflows.

Containerization tools such as Docker allowed developers to package applications and their dependencies into isolated containers. Containers provided consistency across different environments, making it easier to develop, test, and deploy applications. Kubernetes provided a robust solution for automating the deployment, scaling, and management of containerized workloads.

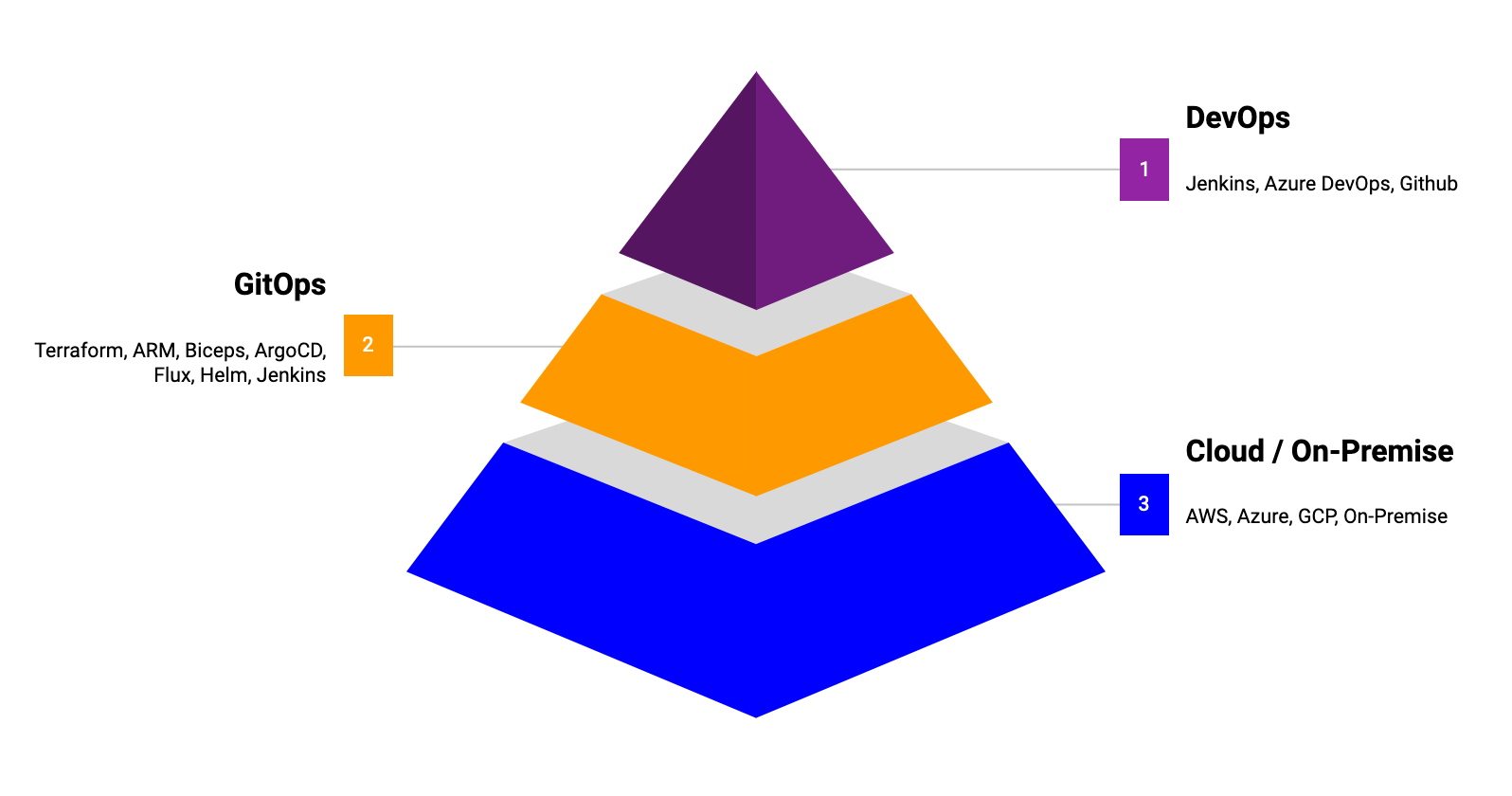

In addition to GCP, other major cloud providers like AWS and Azure started providing Kubernetes as a managed service, so teams no longer had to set it up and manage it on premises. A whole new industry has evolved around the packaging, deployment and delivery of infrastructure. Platform engineering teams are now common in large companies, providing such services across the organization.

Companies are adopting Infrastructure as Code (IaC), using tools such as Terraform, ARM templates and Bicep to define the desired state of their resources. Similar to DevOps, they apply GitOps practices to infrastructure, using a tool like Flux for automatic convergence and Helm for package management when deploying to Kubernetes clusters.
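The idea of "automatic convergence" can be sketched as a comparison between the desired state kept in Git and the actual state of the cluster. The toy example below is only my own illustration of that idea, not how Flux or Terraform are implemented; the functions `plan_changes` and `reconcile` and the dictionary-based state are assumptions made for the sketch.

```python
def plan_changes(desired, actual):
    """Compare desired state (from Git) with actual state (from the cluster)."""
    to_create = {k: v for k, v in desired.items() if k not in actual}
    to_update = {
        k: v for k, v in desired.items() if k in actual and actual[k] != v
    }
    to_delete = [k for k in actual if k not in desired]
    return to_create, to_update, to_delete


def reconcile(desired, actual):
    """Apply the plan and return the new actual state."""
    to_create, to_update, to_delete = plan_changes(desired, actual)
    converged = dict(actual)
    converged.update(to_create)
    converged.update(to_update)
    for name in to_delete:
        del converged[name]
    return converged


if __name__ == "__main__":
    # Hypothetical workloads, keyed by name.
    desired = {"web": {"replicas": 3}, "api": {"replicas": 2}}
    actual = {"web": {"replicas": 1}, "legacy": {"replicas": 1}}
    print(plan_changes(desired, actual))
    print(reconcile(desired, actual) == desired)
```

Running such a loop continuously is what lets a merged pull request, rather than a manual command, become the trigger for an infrastructure change.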

Large Language Models (LLMs)

The use of Artificial Intelligence and Machine Learning was largely limited to academia and a handful of research labs and projects until a few years ago. This changed when OpenAI launched ChatGPT as an MVP. It opened up the possibility of leveraging AI and ML in consumer products.

Although the actual usage of LLMs in the day-to-day products we use is still limited, the technology has immense potential to become a major differentiating factor in product development. For this reason, there has been a surge of investment in LLMs by venture capitalists, corporations and governments.

While working at Microsoft in early 2023, I had an opportunity to work within the Office organization on some of the early iterations of integrating OpenAI with Office products and on the infrastructure to scale it. The team I had joined started around 2020 as a greenfield project to improve engineering documentation through ideas such as better semantic search, workflows and APIs. However, the sudden rise of LLMs completely changed the team's direction: it quickly began to rethink engineering documentation from a different perspective, mostly leveraging prompt engineering.

Most hackathons at Microsoft used to be quiet, but the one early this year, held after the company announced it was integrating OpenAI into several of its products including the Bing search engine, put some fuel into the company. Every team was under pressure to leverage OpenAI and integrate it into their product. I remember one VP of Engineering clearly instructing teams not to put buttons everywhere on the UI for the sake of it, but to make the integration seamless from a user experience perspective!

My team built the first MVP of Visual Studio plugins that generate documentation from the project source code and metadata using OpenAI and prompt engineering. We had to think about software design and development from a different perspective, such as creating dynamic prompts, optimizing tokens in API calls, and chaining results and reasoning.
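The three ideas of dynamic prompts, token budgets and chaining can be sketched in a few lines. This is only an illustration under my own assumptions, not the code we wrote at Microsoft: `estimate_tokens` uses a crude four-characters-per-token guess, `fake_complete` stands in for a real model call, and every function name here is hypothetical.

```python
def estimate_tokens(text):
    """Rough assumption: about four characters per token; real tokenizers differ."""
    return max(1, len(text) // 4)


def build_prompt(file_name, source, max_source_tokens=50):
    """Dynamic prompt: fill a template and trim the source to a token budget."""
    budget_chars = max_source_tokens * 4
    if len(source) > budget_chars:
        source = source[:budget_chars] + "\n# ...truncated..."
    return f"Write documentation for {file_name}.\n\nSource:\n{source}"


def document_project(files, complete):
    """Chaining: summarize each file, then feed the summaries into a final prompt."""
    summaries = [complete(build_prompt(name, src)) for name, src in files.items()]
    overview_prompt = (
        "Combine these notes into a project overview:\n" + "\n".join(summaries)
    )
    return complete(overview_prompt)


def fake_complete(prompt):
    """Stand-in for a real model call; echoes the first line of the prompt."""
    return f"[{estimate_tokens(prompt)} tokens] {prompt.splitlines()[0]}"


if __name__ == "__main__":
    files = {"app.py": "print('hello')", "util.py": "def add(a, b): return a + b"}
    print(document_project(files, fake_complete))
```

Passing the model call in as the `complete` argument keeps the prompt logic testable without network access, which I find useful when iterating on prompts.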

LLMs are going to have a huge impact on software development in the coming years in all areas, including infrastructure, databases and hardware. We will see more customized GPUs and memory tailored to specific use cases, storage for new data models different from SQL and NoSQL, and development environments that support building Software 2.0.

Conclusion

Technology will continue to evolve, and software engineers need to understand it to make informed decisions on where to make tradeoffs. Only then will they be able to influence how business problems are solved in the most meaningful way.

(I will be writing a separate post in the future to delve into each of these major topics and events. Stay tuned!)